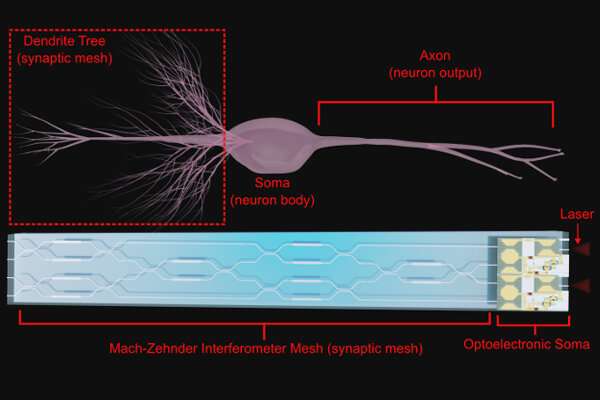

Experiments show “Cells that fire together wire together.” Credit: Luis El Srouji, Yun-Jhu Lee, Mehmet Berkay On, Li Zhang, and S. J. Ben Yoo

Perfect recall, computational wizardry and rapier wit: That's the brain we all want, but how does one design such a brain? The real thing consists of roughly 80 billion neurons, each of which coordinates with others through up to tens of thousands of synaptic connections. Unlike a standard laptop, the human brain has no centralized processor.

Instead, many calculations run in parallel, and their outcomes are compared. Although the operating principles of the human brain are not fully understood, existing mathematical algorithms can be used to rework deep learning principles into systems that behave more like a human brain. This brain-inspired computing paradigm, spiking neural networks (SNNs), provides a computing architecture well aligned with the potential advantages of systems that combine optical and electronic components.

In SNNs, information is carried by spikes (action potentials), the electrical impulses that real neurons emit when they fire. A key feature of SNNs is asynchronous processing: each spike is handled as it occurs in time, rather than inputs being processed together in a batch as in traditional neural networks. This allows SNNs to react quickly to changes in their inputs and to perform certain types of computation more efficiently than traditional neural networks.
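To make the event-driven idea concrete, here is a minimal Python sketch of a single leaky integrate-and-fire (LIF) neuron. The LIF model is a common textbook choice and is an assumption here; the article does not specify a neuron model. The neuron is updated only when an input spike arrives, and between events its membrane potential simply decays, so the decay is computed in closed form instead of by stepping a clock. The function name `run_event_driven_lif` and the values of `tau` and `threshold` are hypothetical.

```python
import heapq
import math


def run_event_driven_lif(input_spikes, weights, tau=20.0, threshold=1.0):
    """Event-driven leaky integrate-and-fire neuron (illustrative sketch).

    input_spikes: dict mapping input index -> list of spike times (ms)
    weights: dict mapping input index -> synaptic weight
    tau, threshold: hypothetical membrane time constant (ms) and firing threshold
    Returns the list of output spike times.
    """
    # Merge every input spike train into one time-ordered event queue.
    events = [(t, i) for i, times in input_spikes.items() for t in times]
    heapq.heapify(events)

    v, last_t = 0.0, 0.0
    out = []
    while events:
        t, i = heapq.heappop(events)
        # Nothing happens between events except leak, so apply the
        # exponential decay for the elapsed interval in one step.
        v *= math.exp(-(t - last_t) / tau)
        last_t = t
        v += weights[i]  # integrate the arriving spike
        if v >= threshold:
            out.append(t)  # fire an output spike at this event time
            v = 0.0  # reset after firing
    return out


close = run_event_driven_lif({0: [10.0], 1: [12.0]}, {0: 0.6, 1: 0.6})
far = run_event_driven_lif({0: [10.0], 1: [100.0]}, {0: 0.6, 1: 0.6})
```

Two inputs of weight 0.6 arriving 2 ms apart push the potential over threshold (`close` holds one spike at 12 ms), while the same inputs 90 ms apart do not (`far` is empty), because the first contribution has leaked away. Spike timing, not just input magnitude, shapes the output.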

SNNs can also implement forms of neural computation that are difficult or impossible to implement in traditional neural networks, such as temporal processing and spike-timing-dependent plasticity (STDP). STDP is a form of Hebbian learning in which the strength of a synaptic connection changes according to the relative timing of the spikes on either side of the synapse. (Hebbian learning is summarized as "Cells that fire together wire together," and it lends itself to mathematical models of the brain's plasticity, the capacity that underlies learning.)
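A common way to formalize STDP is a pair-based rule with exponential timing windows, sketched below in Python. The amplitudes `a_plus` and `a_minus`, the time constants, and the weight bounds are hypothetical values chosen for illustration, not parameters from the paper. A presynaptic spike shortly before a postsynaptic one strengthens the synapse, the reverse order weakens it, and either effect fades as the two spikes move apart in time.

```python
import math


def stdp_dw(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for one pre/post spike pair under a pair-based STDP rule (times in ms).

    All amplitudes and time constants are hypothetical illustration values.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Pre fired before post ("fire together"): potentiate.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fired before pre: depress.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(w, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Sum the STDP updates over all spike pairs and clip the weight to [w_min, w_max]."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            w += stdp_dw(t_pre, t_post)
    return min(max(w, w_min), w_max)


w_causal = apply_stdp(0.5, pre_spikes=[10.0], post_spikes=[15.0])
w_acausal = apply_stdp(0.5, pre_spikes=[15.0], post_spikes=[10.0])
```

Only spike times local to the synapse enter the update, which is why rules of this kind suit hardware where each synapse must learn on its own.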

A recently published paper in the IEEE Journal of Selected Topics in Quantum Electronics describes the development of an SNN device that co-integrates optoelectronic neurons, analog electrical circuits, and Mach-Zehnder interferometer (MZI) meshes. These meshes are optical circuits that can perform matrix multiplication, much as networks of synapses weight and combine signals in the brain.
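As a rough illustration of how an interferometer mesh multiplies a vector by a matrix, the Python sketch below (using NumPy) models each Mach-Zehnder interferometer (MZI) as two ideal 50:50 couplers with a tunable internal phase `theta` and an external phase `phi`, which is one common convention among several. Cascading MZIs on neighboring waveguides yields an n-by-n transfer matrix, and light entering as a vector of field amplitudes leaves as that matrix times the vector. The mesh layout, phase values, and function names are assumptions for illustration, not the paper's design. A lossless mesh like this realizes a unitary matrix; general weight matrices are commonly handled by factoring them (for example with a singular value decomposition) into two meshes plus per-channel attenuation.

```python
import numpy as np

# Transfer matrix of an ideal, lossless 50:50 directional coupler.
BS = np.array([[1, 1j], [1j, 1]]) / np.sqrt(2)


def mzi(theta, phi):
    """2x2 transfer matrix of one MZI: external phase phi, coupler, internal phase theta, coupler."""
    return BS @ np.diag([np.exp(1j * theta), 1]) @ BS @ np.diag([np.exp(1j * phi), 1])


def mesh_matrix(n, settings):
    """Compose MZIs on adjacent waveguide pairs into an n-by-n transfer matrix.

    settings: list of (top_mode, theta, phi) in the order light meets them.
    """
    u = np.eye(n, dtype=complex)
    for m, theta, phi in settings:
        t = np.eye(n, dtype=complex)
        t[m:m + 2, m:m + 2] = mzi(theta, phi)
        u = t @ u  # each new MZI acts on light that has already passed the earlier ones
    return u


# Hypothetical 4-mode rectangular mesh: alternating columns of MZIs on
# pairs (0,1),(2,3) then (1,2); phases are random placeholders.
rng = np.random.default_rng(0)
n = 4
settings = []
for col in range(n):
    for m in range(col % 2, n - 1, 2):
        settings.append((m, rng.uniform(0, 2 * np.pi), rng.uniform(0, 2 * np.pi)))

u = mesh_matrix(n, settings)
x = np.array([1.0, 0.5, 0.0, 0.25], dtype=complex)  # input field amplitudes
y = u @ x  # the matrix-vector product, performed "optically"
```

Under this convention, `theta = 0` routes light fully to the opposite waveguide (cross state) and `theta = pi` passes it straight through (bar state); intermediate phases split the power, which is what makes each MZI a tunable 2x2 weight.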

The authors showed that optoelectronic neurons can accept input from an optical communication network, process it through analog electrical circuits, and send their output back to the network through a laser. This approach allows faster data transfer and communication between systems than electronic-only systems provide.
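The optical-in, electrical-processing, optical-out chain can be sketched as a simple time-stepped circuit model in Python. Everything here is a hypothetical simplification, not the authors' circuit: a photodetector with an assumed responsivity converts incoming optical power to current, a leaky RC node integrates that current, a comparator checks a threshold, and crossing it fires a laser pulse and resets the node. The function name `oeo_neuron` and all component values are illustrative.

```python
import numpy as np


def oeo_neuron(optical_in_mw, dt_ns=0.1, responsivity_a_per_w=0.8,
               r_ohm=1e3, c_pf=1.0, v_th=0.5, laser_pulse_mw=1.0):
    """Time-stepped sketch of an optoelectronic (O-E-O) spiking neuron.

    optical_in_mw: optical power reaching the photodetector at each step (mW).
    All component values are hypothetical. Returns (voltage, laser_output_mw).
    """
    tau_ns = r_ohm * c_pf * 1e-3  # RC time constant in ns (1 kOhm * 1 pF = 1 ns)
    v = np.zeros(len(optical_in_mw))
    laser = np.zeros(len(optical_in_mw))
    v_now = 0.0
    for k, p_mw in enumerate(optical_in_mw):
        i_a = responsivity_a_per_w * p_mw * 1e-3  # photodetector: optical -> electrical
        v_now += (dt_ns / tau_ns) * (i_a * r_ohm - v_now)  # leaky RC integration (forward Euler)
        if v_now >= v_th:  # comparator threshold
            laser[k] = laser_pulse_mw  # drive the laser: electrical -> optical
            v_now = 0.0  # reset the integrator
        v[k] = v_now
    return v, laser


v_strong, laser_strong = oeo_neuron(np.full(200, 1.0))
v_weak, laser_weak = oeo_neuron(np.full(200, 0.5))
```

With 1 mW of steady input, the node voltage crosses the 0.5 V threshold every 10 steps and the laser emits a pulse train; at 0.5 mW the voltage settles near 0.4 V and the neuron stays silent. Because the output is itself light, it can feed the next stage of an optical network directly.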

The paper also describes how existing algorithms, such as Random Backpropagation and Contrastive Hebbian Learning, can be used in brain-inspired computing systems. These algorithms let each synapse learn from information that is locally available to it, much as in the human brain, which can provide significant advantages in computing performance over traditional machine learning systems that rely on backpropagation.
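The core idea of Random Backpropagation can be shown on a toy two-layer network: the output error is sent backward through a fixed random matrix `b` instead of the transpose of the forward output weights, so no layer needs an exact copy of another layer's weights. The Python/NumPy sketch below is a minimal illustration under assumed settings (the synthetic linear regression task, layer sizes, learning rate, and seed are all hypothetical); it does not reproduce the paper's training setup, and Contrastive Hebbian Learning is not shown.

```python
import numpy as np


def train_random_backprop(steps=2000, lr=0.05, seed=0):
    """Train a 2-layer tanh network with random backpropagation (illustrative sketch).

    The backward pass uses a fixed random feedback matrix b in place of w2.T.
    Returns the training loss at each step.
    """
    rng = np.random.default_rng(seed)
    x = rng.normal(size=(200, 4))
    y = x @ rng.normal(size=(4, 1))  # hypothetical toy regression target
    w1 = rng.normal(scale=0.5, size=(4, 16))
    w2 = rng.normal(scale=0.1, size=(16, 1))
    b = rng.normal(scale=0.5, size=(1, 16))  # fixed random feedback weights, never trained
    losses = []
    for _ in range(steps):
        h = np.tanh(x @ w1)  # hidden layer
        out = h @ w2  # linear output layer
        e = out - y  # output error
        losses.append(0.5 * float(np.mean(e ** 2)))
        # Standard backprop would compute e @ w2.T here; random backprop uses e @ b.
        delta = (e @ b) * (1 - h ** 2)
        w2 -= lr * h.T @ e / len(x)
        w1 -= lr * x.T @ delta / len(x)
    return losses


losses = train_random_backprop()
```

Each weight update uses only the layer's own input, its own activity, and an error signal delivered through the fixed feedback pathway, which is the kind of locality that makes such rules attractive for physical hardware.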

In relation to AI and machine learning, SNNs offer several advantages over modern computing paradigms on tasks that resemble the conditions under which biological brains evolved. Because SNNs process data continuously over time, they are well suited to real-time applications in which inputs arrive one at a time and inference and learning happen on each instance as it is presented (such as event-based signal processing).

In addition, because information is spread over time, SNNs can support multiple forms of memory at different time scales, much like the human distinction between working, short-term, and long-term memory. Neuromorphic sensing and robotics are common applications of SNNs; for example, an adaptive robotic arm controller can maintain reliable motor control as its actuators wear down.
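One simple way to picture memory at several time scales is a set of exponentially decaying traces, each driven by the same spikes but with a different time constant. The Python sketch below is an illustrative assumption, and its time constants are hypothetical rather than taken from the paper: right after a short burst all three traces are high, but 500 ms later only the slowest one still retains a record of it.

```python
import math


def trace_value(spike_times, t, tau):
    """Value at time t (ms) of a trace that jumps by 1 at each spike and decays with time constant tau."""
    return sum(math.exp(-(t - s) / tau) for s in spike_times if s <= t)


# Hypothetical time constants standing in for fast, medium, and slow memory (ms).
TAUS = {"fast": 10.0, "medium": 100.0, "slow": 1000.0}

burst = [0.0, 5.0, 10.0]  # a short burst of spikes (ms)
for t in (10.0, 500.0):
    values = {name: round(trace_value(burst, t, tau), 3) for name, tau in TAUS.items()}
    print(f"t = {t} ms:", values)
```

In a real SNN such traces would live in membrane potentials, synaptic currents, or plasticity variables; the point of the sketch is only that different decay rates retain the same event for very different lengths of time.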

More speculatively, future devices might exploit these properties in live audio and natural language processing for voice assistants, live-captioning services, or audio separation; similarly, SNNs could be used for live video and lidar processing in autonomous vehicles or surveillance systems.

More information: Luis El Srouji et al, Scalable Nanophotonic-Electronic Spiking Neural Networks, IEEE Journal of Selected Topics in Quantum Electronics (2022). DOI: 10.1109/JSTQE.2022.3217011

Provided by Institute of Electrical and Electronics Engineers