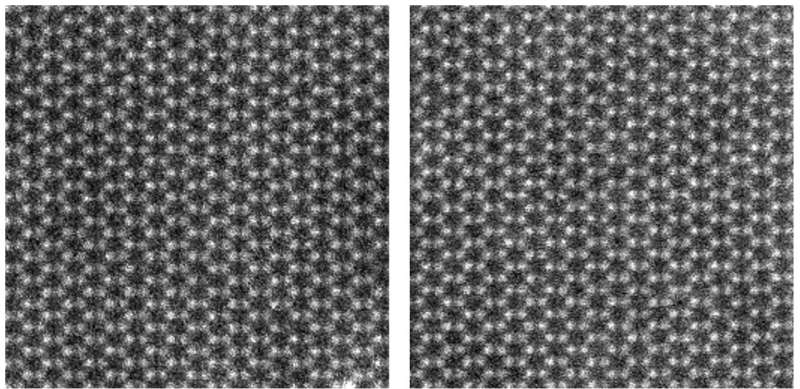

Two microscope images of a material surface. The left image was generated by the researchers' new AI, and the right image was taken by a microscope. Huang noted that the AI is "so good it fools me and my colleagues." Credit: The Grainger College of Engineering at the University of Illinois Urbana-Champaign

The same AI technology used to mimic human art can now synthesize artificial scientific data, advancing efforts toward fully automated data analysis.

Researchers at the University of Illinois Urbana-Champaign have developed an AI that generates artificial data from microscopy experiments commonly used to characterize atomic-level material structures. Drawing on the technology underlying art generators, the AI lets the researchers incorporate background noise and experimental imperfections into the generated data, so that material features can be detected much faster and more efficiently than before.

The study, "Leveraging generative adversarial networks to create realistic scanning transmission electron microscopy images," was published in the journal npj Computational Materials.

"Generative AIs take information and generate new things that haven't existed before in the world, and now we've leveraged that for the goal of automated data analysis," said Pinshane Huang, a U. of I. professor of materials science and engineering and a project co-lead. "What is used to make paintings of llamas in the style of Monet on the internet can now make scientific data so good it fools me and my colleagues."

Other forms of AI and machine learning are routinely used in materials science to assist with data analysis, but they require frequent, time-consuming human intervention. Making these analysis routines more efficient requires a large set of labeled data to show the program what to look for. Moreover, to be effective, the data set needs to account for a wide range of background noise and experimental imperfections, effects that are difficult to model.

Since collecting and labeling such a vast data set using a real microscope is infeasible, Huang worked with U. of I. physics professor Bryan Clark to develop a generative AI that could create a large set of artificial training data from a comparatively small set of real, labeled data. To achieve this, the researchers used a cycle generative adversarial network, or CycleGAN.

"You can think of a CycleGAN as a competition between two entities," Clark said. "There's a 'generator' whose job is to imitate a provided data set, and there's a 'discriminator' whose job is to spot the differences between the generator and the real data. They take turns trying to foil each other, improving themselves based on what the other was able to do. Ultimately, the generator can produce artificial data that is virtually indistinguishable from the real data."
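The turn-taking Clark describes can be sketched in a few lines of Python. This is a toy illustration, not the researchers' code: single numbers stand in for microscope images, the "real data" is assumed to be drawn from a bell curve centered at 4, the generator only learns a shift `b`, and the discriminator is a simple logistic scorer. All function names and settings are hypothetical, and the sketch is a plain adversarial loop that leaves out the cycle-consistency machinery that distinguishes a CycleGAN.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def discriminator_loss(w, c, real, fake):
    """Cross-entropy loss: real samples are labeled 1, generated samples 0."""
    d_real = sigmoid(w * real + c)
    d_fake = sigmoid(w * fake + c)
    return -np.mean(np.log(d_real)) - np.mean(np.log(1.0 - d_fake))

def discriminator_step(w, c, real, fake, lr):
    """One gradient-descent step that makes the discriminator better at spotting fakes."""
    d_real = sigmoid(w * real + c)
    d_fake = sigmoid(w * fake + c)
    grad_w = np.mean(-(1.0 - d_real) * real) + np.mean(d_fake * fake)
    grad_c = np.mean(-(1.0 - d_real)) + np.mean(d_fake)
    return w - lr * grad_w, c - lr * grad_c

def generator_step(b, w, c, z, lr):
    """One gradient-descent step that makes generated samples look more 'real'."""
    d_fake = sigmoid(w * (z + b) + c)
    grad_b = np.mean(-(1.0 - d_fake) * w)  # gradient of -log D(fake) with respect to b
    return b - lr * grad_b

def train(steps=200, batch=256, lr=0.05, seed=0):
    """Alternate discriminator and generator updates (assumed toy settings)."""
    rng = np.random.default_rng(seed)
    b, w, c = 0.0, 0.0, 0.0  # generator shift, discriminator weight and bias
    for _ in range(steps):
        real = rng.normal(4.0, 1.0, batch)  # stand-in for real, labeled data
        z = rng.normal(0.0, 1.0, batch)     # random input to the generator
        w, c = discriminator_step(w, c, real, z + b, lr)  # discriminator's turn
        b = generator_step(b, w, c, z, lr)                # generator's turn
    return b, w, c
```

Calling `train()` returns the generator's learned shift along with the discriminator's parameters. Each round, the discriminator first adjusts to separate real from generated samples, and the generator then shifts its output in whatever direction the discriminator currently rates as more realistic. No particular final value is claimed here, since adversarial training of this kind can oscillate before settling.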

Given a small sample of real microscopy images, the CycleGAN learned to generate images that were then used to train the analysis routine. The routine is now capable of recognizing a wide range of structural features despite the background noise and systematic imperfections.

"The remarkable part of this is that we never had to tell the AI what things like background noise and imperfections like aberration in the microscope are," Clark said. "That means even if there's something that we hadn't thought about, the CycleGAN can learn it and run with it."

Huang's research group has incorporated the CycleGAN into their experiments to detect defects in two-dimensional semiconductors, a class of materials that is promising for applications in electronics and optics but is difficult to characterize without the aid of AI. However, she observed that the method has a much broader reach.

"The dream is to one day have a 'self-driving' microscope, and the biggest barrier was understanding how to process the data," she said. "Our work fills in this gap. We show how you can teach a microscope how to find interesting things without having to know what you're looking for."

More information: Abid Khan et al, Leveraging generative adversarial networks to create realistic scanning transmission electron microscopy images, npj Computational Materials (2023). DOI: 10.1038/s41524-023-01042-3